Camera API

The Android framework includes support for various cameras and camera features available on devices, allowing you to capture pictures and videos in your applications. This document discusses a quick, simple approach to image and video capture and outlines an advanced approach for creating custom camera experiences for your users.

Note: This page describes the Camera class, which has been deprecated. We recommend using the CameraX Jetpack library or, for specific use cases, the camera2 API. Both CameraX and Camera2 work on Android 5.0 (API level 21) and higher.

Considerations

Before enabling your application to use cameras on Android devices, you should consider a few questions about how your app intends to use this hardware feature.

- Camera Requirement - Is the use of a camera so important to your application that you do not want your application installed on a device that does not have a camera? If so, you should declare the camera requirement in your manifest.

- Quick Picture or Customized Camera - How will your application use the camera? Are you just interested in snapping a quick picture or video clip, or will your application provide a new way to use cameras? For getting a quick snap or clip, consider Using Existing Camera Apps. For developing a customized camera feature, check out the Building a Camera App section.

- Foreground Services Requirement - When does your app interact with the camera? On Android 9 (API level 28) and later, apps running in the background cannot access the camera. Therefore, you should use the camera either when your app is in the foreground or as part of a foreground service.

- Storage - Are the images or videos your application generates intended to be visible only to your application, or shared so that other applications such as Gallery or other media and social apps can use them? Do you want the pictures and videos to be available even if your application is uninstalled? Check out the Saving Media Files section to see how to implement these options.

The basics

The Android framework supports capturing images and video through the android.hardware.camera2 API or camera Intent. Here are the relevant classes:

- android.hardware.camera2 - This package is the primary API for controlling device cameras. It can be used to take pictures or videos when you are building a camera application.

- Camera - This class is the older deprecated API for controlling device cameras.

- SurfaceView - This class is used to present a live camera preview to the user.

- MediaRecorder - This class is used to record video from the camera.

- Intent - An intent action type of MediaStore.ACTION_IMAGE_CAPTURE or MediaStore.ACTION_VIDEO_CAPTURE can be used to capture images or videos without directly using the Camera object.

Manifest declarations

Before starting development on your application with the Camera API, you should make sure your manifest has the appropriate declarations to allow use of camera hardware and other related features.

- Camera Permission - Your application must request permission to use a device camera.

<uses-permission android:name="android.permission.CAMERA" />

Note: If you are using the camera by invoking an existing camera app, your application does not need to request this permission.

- Camera Features - Your application must also declare use of camera features, for example:

<uses-feature android:name="android.hardware.camera" />

For a list of camera features, see the manifest Features Reference.

Adding camera features to your manifest causes Google Play to prevent your application from being installed on devices that do not include a camera or do not support the camera features you specify. For more information about using feature-based filtering with Google Play, see Google Play and Feature-Based Filtering.

If your application can use a camera or camera feature for proper operation, but does not require it, you should specify this in the manifest by including the android:required attribute and setting it to false:

<uses-feature android:name="android.hardware.camera" android:required="false" />

- Storage Permission - Your application can save images or videos to the device's external storage (SD Card) if it targets Android 10 (API level 29) or lower and specifies the following in the manifest.

<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />

- Audio Recording Permission - For recording audio with video capture, your application must request the audio capture permission.

<uses-permission android:name="android.permission.RECORD_AUDIO" />

- Location Permission - If your application tags images with GPS location information, you must request the ACCESS_FINE_LOCATION permission. Note that, if your app targets Android 5.0 (API level 21) or higher, you also need to declare that your app uses the device's GPS:

<uses-permission android:name="android.permission.ACCESS_FINE_LOCATION" />
...
<!-- Needed only if your app targets Android 5.0 (API level 21) or higher. -->
<uses-feature android:name="android.hardware.location.gps" />

For more information about getting user location, see Location Strategies.

Using existing camera apps

A quick way to enable taking pictures or videos in your application without a lot of extra code is to use an Intent to invoke an existing Android camera application. The details are described in the training lessons Taking Photos Simply and Recording Videos Simply.

Building a camera app

Some developers may require a camera user interface that is customized to the look of their application or provides special features. Writing your own picture-taking code can provide a more compelling experience for your users.

Note: The following guide is for the older, deprecated Camera API. For new or advanced camera applications, the newer android.hardware.camera2 API is recommended.

The general steps for creating a custom camera interface for your application are as follows:

- Detect and Access Camera - Create code to check for the existence of cameras and request access.

- Create a Preview Class - Create a camera preview class that extends SurfaceView and implements the SurfaceHolder.Callback interface. This class previews the live images from the camera.

- Build a Preview Layout - Once you have the camera preview class, create a view layout that incorporates the preview and the user interface controls you want.

- Set Up Listeners for Capture - Connect listeners for your interface controls to start image or video capture in response to user actions, such as pressing a button.

- Capture and Save Files - Set up the code for capturing pictures or videos and saving the output.

- Release the Camera - After using the camera, your application must properly release it for use by other applications.

Camera hardware is a shared resource that must be carefully managed so your application does not collide with other applications that may also want to use it. The following sections discuss how to detect camera hardware, how to request access to a camera, how to capture pictures or video, and how to release the camera when your application is done using it.

Caution: Remember to release the Camera object by calling Camera.release() when your application is done using it! If your application does not properly release the camera, all subsequent attempts to access the camera, including those by your own application, will fail and may cause your or other applications to be shut down.

Detecting camera hardware

If your application does not specifically require a camera using a manifest declaration, you should check to see if a camera is available at runtime. To perform this check, use the PackageManager.hasSystemFeature() method, as shown in the example code below:

Kotlin

/** Check if this device has a camera */
private fun checkCameraHardware(context: Context): Boolean {
    if (context.packageManager.hasSystemFeature(PackageManager.FEATURE_CAMERA)) {
        // this device has a camera
        return true
    } else {
        // no camera on this device
        return false
    }
}

Java

/** Check if this device has a camera */
private boolean checkCameraHardware(Context context) {
    if (context.getPackageManager().hasSystemFeature(PackageManager.FEATURE_CAMERA)) {
        // this device has a camera
        return true;
    } else {
        // no camera on this device
        return false;
    }
}

Android devices can have multiple cameras, for example a back-facing camera for photography and a front-facing camera for video calls. Android 2.3 (API Level 9) and later allows you to check the number of cameras available on a device using the Camera.getNumberOfCameras() method.

Accessing cameras

If you have determined that the device on which your application is running has a camera, you must request access to it by getting an instance of Camera (unless you are using an intent to access the camera).

To access the primary camera, use the Camera.open() method and be sure to catch any exceptions, as shown in the code below:

Kotlin

/** A safe way to get an instance of the Camera object. */
fun getCameraInstance(): Camera? {
    return try {
        Camera.open() // attempt to get a Camera instance
    } catch (e: Exception) {
        // Camera is not available (in use or does not exist)
        null // returns null if camera is unavailable
    }
}

Java

/** A safe way to get an instance of the Camera object. */
public static Camera getCameraInstance() {
    Camera c = null;
    try {
        c = Camera.open(); // attempt to get a Camera instance
    } catch (Exception e) {
        // Camera is not available (in use or does not exist)
    }
    return c; // returns null if camera is unavailable
}

Caution: Always check for exceptions when using Camera.open(). Failing to check for exceptions if the camera is in use or does not exist will cause your application to be shut down by the system.

On devices running Android 2.3 (API Level 9) or higher, you can access specific cameras using Camera.open(int). The example code above will access the first, back-facing camera on a device with more than one camera.

Checking camera features

Once you obtain access to a camera, you can get further information about its capabilities using the Camera.getParameters() method and checking the returned Camera.Parameters object for supported capabilities. When using API Level 9 or higher, use Camera.getCameraInfo() to determine if a camera is on the front or back of the device, and the orientation of the image.
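Choosing a camera by direction typically means looping over indices 0 to Camera.getNumberOfCameras() - 1, filling a Camera.CameraInfo with Camera.getCameraInfo(index, info), and comparing info.facing with Camera.CameraInfo.CAMERA_FACING_BACK or CAMERA_FACING_FRONT; the matching index is what Camera.open(int) expects. The sketch below keeps only the selection step so it runs on a plain JVM. It assumes the facing values have already been collected into an int array, and FacingLookup and findCamera are hypothetical names; the two constants reuse the values of the Android constants (0 for back, 1 for front).

```java
/**
 * Hypothetical helper: picks a camera index by facing direction.
 * On a device, facings[i] would be CameraInfo.facing as filled in by
 * Camera.getCameraInfo(i, info) for each i below Camera.getNumberOfCameras().
 */
final class FacingLookup {
    // Same values as Camera.CameraInfo.CAMERA_FACING_BACK / CAMERA_FACING_FRONT.
    static final int FACING_BACK = 0;
    static final int FACING_FRONT = 1;

    private FacingLookup() {}

    /** Returns the first index whose facing matches wantedFacing, or -1 if none does. */
    static int findCamera(int[] facings, int wantedFacing) {
        for (int i = 0; i < facings.length; i++) {
            if (facings[i] == wantedFacing) {
                return i;
            }
        }
        return -1;
    }
}
```

A caller would treat -1 as "no such camera" and fall back, for example, to the back-facing camera or hide a switch-camera control.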

Creating a preview class

For users to effectively take pictures or video, they must be able to see what the device camera sees. A camera preview class is a SurfaceView that can display the live image data coming from a camera, so users can frame and capture a picture or video.

The following example code demonstrates how to create a basic camera preview class that can be included in a View layout. This class implements SurfaceHolder.Callback in order to capture the callback events for creating and destroying the view, which are needed for assigning the camera preview input.

Kotlin

/** A basic Camera preview class */
class CameraPreview(
        context: Context,
        private val mCamera: Camera
) : SurfaceView(context), SurfaceHolder.Callback {

    private val mHolder: SurfaceHolder = holder.apply {
        // Install a SurfaceHolder.Callback so we get notified when the
        // underlying surface is created and destroyed.
        addCallback(this@CameraPreview)
        // deprecated setting, but required on Android versions prior to 3.0
        setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS)
    }

    override fun surfaceCreated(holder: SurfaceHolder) {
        // The Surface has been created, now tell the camera where to draw the preview.
        mCamera.apply {
            try {
                setPreviewDisplay(holder)
                startPreview()
            } catch (e: IOException) {
                Log.d(TAG, "Error setting camera preview: ${e.message}")
            }
        }
    }

    override fun surfaceDestroyed(holder: SurfaceHolder) {
        // empty. Take care of releasing the Camera preview in your activity.
    }

    override fun surfaceChanged(holder: SurfaceHolder, format: Int, w: Int, h: Int) {
        // If your preview can change or rotate, take care of those events here.
        // Make sure to stop the preview before resizing or reformatting it.
        if (mHolder.surface == null) {
            // preview surface does not exist
            return
        }

        // stop preview before making changes
        try {
            mCamera.stopPreview()
        } catch (e: Exception) {
            // ignore: tried to stop a non-existent preview
        }

        // set preview size and make any resize, rotate or
        // reformatting changes here

        // start preview with new settings
        mCamera.apply {
            try {
                setPreviewDisplay(mHolder)
                startPreview()
            } catch (e: Exception) {
                Log.d(TAG, "Error starting camera preview: ${e.message}")
            }
        }
    }
}

Java

/** A basic Camera preview class */
public class CameraPreview extends SurfaceView implements SurfaceHolder.Callback {
    private SurfaceHolder mHolder;
    private Camera mCamera;

    public CameraPreview(Context context, Camera camera) {
        super(context);
        mCamera = camera;

        // Install a SurfaceHolder.Callback so we get notified when the
        // underlying surface is created and destroyed.
        mHolder = getHolder();
        mHolder.addCallback(this);
        // deprecated setting, but required on Android versions prior to 3.0
        mHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);
    }

    public void surfaceCreated(SurfaceHolder holder) {
        // The Surface has been created, now tell the camera where to draw the preview.
        try {
            mCamera.setPreviewDisplay(holder);
            mCamera.startPreview();
        } catch (IOException e) {
            Log.d(TAG, "Error setting camera preview: " + e.getMessage());
        }
    }

    public void surfaceDestroyed(SurfaceHolder holder) {
        // empty. Take care of releasing the Camera preview in your activity.
    }

    public void surfaceChanged(SurfaceHolder holder, int format, int w, int h) {
        // If your preview can change or rotate, take care of those events here.
        // Make sure to stop the preview before resizing or reformatting it.
        if (mHolder.getSurface() == null) {
            // preview surface does not exist
            return;
        }

        // stop preview before making changes
        try {
            mCamera.stopPreview();
        } catch (Exception e) {
            // ignore: tried to stop a non-existent preview
        }

        // set preview size and make any resize, rotate or
        // reformatting changes here

        // start preview with new settings
        try {
            mCamera.setPreviewDisplay(mHolder);
            mCamera.startPreview();
        } catch (Exception e) {
            Log.d(TAG, "Error starting camera preview: " + e.getMessage());
        }
    }
}

If you want to set a specific size for your camera preview, set this in the surfaceChanged() method as noted in the comments above. When setting preview size, you must use values from getSupportedPreviewSizes(). Do not set arbitrary values in the setPreviewSize() method.
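One way to respect that rule is to pick, from the list returned by getSupportedPreviewSizes(), the size whose aspect ratio is closest to the surface's, preferring the larger size on a tie. This policy is an assumption about a reasonable choice, not a framework rule. The sketch uses a hypothetical PreviewSize class of plain ints in place of Camera.Size so it runs without the Android SDK; on a device, the chosen width and height would go to Camera.Parameters.setPreviewSize() inside surfaceChanged().

```java
import java.util.List;

/** Hypothetical stand-in for Camera.Size: a width and height in pixels. */
final class PreviewSize {
    final int width;
    final int height;

    PreviewSize(int width, int height) {
        this.width = width;
        this.height = height;
    }

    long area() {
        return (long) width * height;
    }
}

final class PreviewSizes {
    private PreviewSizes() {}

    /**
     * Returns the supported size whose width/height ratio is closest to
     * targetWidth/targetHeight, preferring the larger area on a tie,
     * or null if the list is empty.
     */
    static PreviewSize choose(List<PreviewSize> supported, int targetWidth, int targetHeight) {
        double targetRatio = (double) targetWidth / targetHeight;
        PreviewSize best = null;
        double bestDiff = Double.MAX_VALUE;
        for (PreviewSize size : supported) {
            double diff = Math.abs((double) size.width / size.height - targetRatio);
            boolean closer = diff < bestDiff;
            boolean sameRatioButLarger = best != null && diff == bestDiff && size.area() > best.area();
            if (closer || sameRatioButLarger) {
                best = size;
                bestDiff = diff;
            }
        }
        return best;
    }
}
```

Camera preview sizes are usually reported in landscape terms, so if the activity runs in portrait it may be necessary to swap the target width and height before calling choose().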

Note: With the introduction of the Multi-Window feature in Android 7.0 (API level 24) and higher, you can no longer assume the aspect ratio of the preview is the same as your activity even after calling setDisplayOrientation(). Depending on the window size and aspect ratio, you may have to fit a wide camera preview into a portrait-oriented layout, or vice versa, using a letterbox layout.

Placing preview in a layout

A camera preview class, such as the example shown in the previous section, must be placed in the layout of an activity along with other user interface controls for taking a picture or video. This section shows you how to build a basic layout and activity for the preview.

The following layout code provides a very basic view that can be used to display a camera preview. In this example, the FrameLayout element is meant to be the container for the camera preview class. This layout type is used so that additional picture information or controls can be overlaid on the live camera preview images.

<?xml version="1.0" encoding="utf-8"?>
<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:orientation="horizontal"
    android:layout_width="fill_parent"
    android:layout_height="fill_parent">

    <FrameLayout
        android:id="@+id/camera_preview"
        android:layout_width="fill_parent"
        android:layout_height="fill_parent"
        android:layout_weight="1" />

    <Button
        android:id="@+id/button_capture"
        android:text="Capture"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_gravity="center" />
</LinearLayout>

On most devices, the default orientation of the camera preview is landscape. This example layout specifies a horizontal (landscape) layout and the code below fixes the orientation of the application to landscape. For simplicity in rendering a camera preview, you should change your application's preview activity orientation to landscape by adding the following to your manifest.

<activity android:name=".CameraActivity"
          android:label="@string/app_name"
          android:screenOrientation="landscape">
    <!-- configure this activity to use landscape orientation -->

    <intent-filter>
        <action android:name="android.intent.action.MAIN" />
        <category android:name="android.intent.category.LAUNCHER" />
    </intent-filter>
</activity>

Note: A camera preview does not have to be in landscape mode. Starting in Android 2.2 (API Level 8), you can use the setDisplayOrientation() method to set the rotation of the preview image. In order to change preview orientation as the user re-orients the phone, within the surfaceChanged() method of your preview class, first stop the preview with Camera.stopPreview(), change the orientation, and then start the preview again with Camera.startPreview().
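The Camera.setDisplayOrientation() reference describes how to derive the rotation from the sensor orientation (Camera.CameraInfo.orientation) and the current display rotation, with an extra step for front-facing cameras because their preview is mirrored. The sketch below restates that arithmetic as a pure function so it can be checked without a device. PreviewOrientation and its parameter names are hypothetical, and the sketch assumes the caller has already converted the display rotation (Surface.ROTATION_0, ROTATION_90, and so on) into degrees.

```java
/** Hypothetical helper: computes the value to pass to Camera.setDisplayOrientation(). */
final class PreviewOrientation {
    private PreviewOrientation() {}

    /**
     * sensorOrientation: CameraInfo.orientation (0, 90, 180 or 270).
     * displayRotationDegrees: current display rotation in degrees (0, 90, 180 or 270).
     */
    static int displayOrientation(int sensorOrientation, int displayRotationDegrees, boolean frontFacing) {
        if (frontFacing) {
            int result = (sensorOrientation + displayRotationDegrees) % 360;
            return (360 - result) % 360; // compensate for the front camera's mirror image
        }
        // back-facing camera
        return (sensorOrientation - displayRotationDegrees + 360) % 360;
    }
}
```

On a device, the result would be passed to Camera.setDisplayOrientation() in surfaceChanged(), between the stopPreview() and startPreview() calls. For example, a back-facing camera whose sensor reports 90 degrees needs a display orientation of 90 when the device is upright (rotation 0).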

In the activity for your camera view, add your preview class to the FrameLayout element shown in the example above. Your camera activity must also ensure that it releases the camera when it is paused or shut down. The following example shows how to modify a camera activity to attach the preview class shown in Creating a preview class.

Kotlin

class CameraActivity : Activity() {

    private var mCamera: Camera? = null
    private var mPreview: CameraPreview? = null

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_main)

        // Create an instance of Camera
        mCamera = getCameraInstance()

        mPreview = mCamera?.let {
            // Create our Preview view
            CameraPreview(this, it)
        }

        // Set the Preview view as the content of our activity.
        mPreview?.also {
            val preview: FrameLayout = findViewById(R.id.camera_preview)
            preview.addView(it)
        }
    }
}

Java

public class CameraActivity extends Activity {

    private Camera mCamera;
    private CameraPreview mPreview;

    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);

        // Create an instance of Camera
        mCamera = getCameraInstance();

        // Create our Preview view and set it as the content of our activity.
        mPreview = new CameraPreview(this, mCamera);
        FrameLayout preview = (FrameLayout) findViewById(R.id.camera_preview);
        preview.addView(mPreview);
    }
}

Note: The getCameraInstance() method in the example above refers to the example method shown in Accessing cameras.

Capturing pictures

Once you have built a preview class and a view layout in which to display it, you are ready to start capturing images with your application. In your application code, you must set up listeners for your user interface controls to respond to a user action by taking a picture.

In order to retrieve a picture, use the Camera.takePicture() method. This method takes three parameters which receive data from the camera: a Camera.ShutterCallback, a Camera.PictureCallback for raw image data, and a Camera.PictureCallback for JPEG data. In order to receive data in a JPEG format, you must implement a Camera.PictureCallback interface to receive the image data and write it to a file. The following code shows a basic implementation of the Camera.PictureCallback interface to save an image received from the camera.

Kotlin

private val mPicture = Camera.PictureCallback { data, _ ->
    val pictureFile: File = getOutputMediaFile(MEDIA_TYPE_IMAGE) ?: run {
        Log.d(TAG, ("Error creating media file, check storage permissions"))
        return@PictureCallback
    }

    try {
        val fos = FileOutputStream(pictureFile)
        fos.write(data)
        fos.close()
    } catch (e: FileNotFoundException) {
        Log.d(TAG, "File not found: ${e.message}")
    } catch (e: IOException) {
        Log.d(TAG, "Error accessing file: ${e.message}")
    }
}

Java

private PictureCallback mPicture = new PictureCallback() {

    @Override
    public void onPictureTaken(byte[] data, Camera camera) {

        File pictureFile = getOutputMediaFile(MEDIA_TYPE_IMAGE);
        if (pictureFile == null) {
            Log.d(TAG, "Error creating media file, check storage permissions");
            return;
        }

        try {
            FileOutputStream fos = new FileOutputStream(pictureFile);
            fos.write(data);
            fos.close();
        } catch (FileNotFoundException e) {
            Log.d(TAG, "File not found: " + e.getMessage());
        } catch (IOException e) {
            Log.d(TAG, "Error accessing file: " + e.getMessage());
        }
    }
};

Trigger capturing an image by calling the Camera.takePicture() method. The following example code shows how to call this method from a button View.OnClickListener.

Kotlin

val captureButton: Button = findViewById(R.id.button_capture)
captureButton.setOnClickListener {
    // get an image from the camera
    mCamera?.takePicture(null, null, mPicture)
}

Java

// Add a listener to the Capture button
Button captureButton = (Button) findViewById(R.id.button_capture);
captureButton.setOnClickListener(
    new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            // get an image from the camera
            mCamera.takePicture(null, null, mPicture);
        }
    }
);

Note: The mPicture member in the examples above refers to the PictureCallback example code shown earlier.
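The PictureCallback examples above call close() only after write() succeeds, so an IOException during the write leaves the FileOutputStream open. A minimal alternative that needs nothing beyond the standard Java library is to write the bytes inside a try-with-resources statement, which closes the stream on every path. JpegWriter and save() are hypothetical names; onPictureTaken() could call save(data, pictureFile) and log the IOException as before (FileNotFoundException is a subclass of IOException, so one catch covers both cases).

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;

/** Hypothetical helper for writing the JPEG bytes delivered to onPictureTaken(). */
final class JpegWriter {
    private JpegWriter() {}

    /** Writes data to pictureFile; the stream is closed even if write() throws. */
    static void save(byte[] data, File pictureFile) throws IOException {
        try (FileOutputStream fos = new FileOutputStream(pictureFile)) {
            fos.write(data);
        }
    }
}
```

In Kotlin, the standard library's use() extension gives the same guarantee: FileOutputStream(pictureFile).use { it.write(data) }.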

Caution: Remember to release the Camera object by calling Camera.release() when your application is done using it! For information about how to release the camera, see Releasing the camera.

Capturing videos

Video capture using the Android framework requires careful management of the Camera object and coordination with the MediaRecorder class. When recording video with Camera, you must manage the Camera.lock() and Camera.unlock() calls to allow MediaRecorder access to the camera hardware, in addition to the Camera.open() and Camera.release() calls.

Note: Starting with Android 4.0 (API level 14), the Camera.lock() and Camera.unlock() calls are managed for you automatically.

Unlike taking pictures with a device camera, capturing video requires a very particular call order. You must follow a specific order of execution to successfully prepare for and capture video with your application, as detailed below.

- Open Camera - Use Camera.open() to get an instance of the camera object.

- Connect Preview - Prepare a live camera image preview by connecting a SurfaceView to the camera using Camera.setPreviewDisplay().

- Start Preview - Call Camera.startPreview() to begin displaying the live camera images.

- Start Recording Video - The following steps must be completed in order to successfully record video:

  - Unlock the Camera - Unlock the camera for use by MediaRecorder by calling Camera.unlock().

  - Configure MediaRecorder - Call the following MediaRecorder methods in this order. For more information, see the MediaRecorder reference documentation.

    - setCamera() - Set the camera to be used for video capture; use your application's current instance of Camera.
    - setAudioSource() - Set the audio source; use MediaRecorder.AudioSource.CAMCORDER.
    - setVideoSource() - Set the video source; use MediaRecorder.VideoSource.CAMERA.
    - Set the video output format and encoding. For Android 2.2 (API Level 8) and higher, use the MediaRecorder.setProfile method, and get a profile instance using CamcorderProfile.get(). For versions of Android prior to 2.2, you must set the video output format and encoding parameters:
      - setOutputFormat() - Set the output format; specify the default setting or MediaRecorder.OutputFormat.MPEG_4.
      - setAudioEncoder() - Set the sound encoding type; specify the default setting or MediaRecorder.AudioEncoder.AMR_NB.
      - setVideoEncoder() - Set the video encoding type; specify the default setting or MediaRecorder.VideoEncoder.MPEG_4_SP.
    - setOutputFile() - Set the output file; use getOutputMediaFile(MEDIA_TYPE_VIDEO).toString() from the example method in the Saving Media Files section.
    - setPreviewDisplay() - Specify the SurfaceView preview layout element for your application. Use the same object you specified for Connect Preview.

    Caution: You must call these MediaRecorder configuration methods in this order, otherwise your application will encounter errors and the recording will fail.

  - Prepare MediaRecorder - Prepare the MediaRecorder with the provided configuration settings by calling MediaRecorder.prepare().

  - Start MediaRecorder - Start recording video by calling MediaRecorder.start().

- Stop Recording Video - Call the following methods in order to successfully complete a video recording:

  - Stop MediaRecorder - Stop recording video by calling MediaRecorder.stop().

  - Reset MediaRecorder - Optionally, remove the configuration settings from the recorder by calling MediaRecorder.reset().

  - Release MediaRecorder - Release the MediaRecorder by calling MediaRecorder.release().

  - Lock the Camera - Lock the camera so that future MediaRecorder sessions can use it by calling Camera.lock(). Starting with Android 4.0 (API level 14), this call is not required unless the MediaRecorder.prepare() call fails.

- Stop the Preview - When your activity has finished using the camera, stop the preview using Camera.stopPreview().

- Release Camera - Release the camera so that other applications can use it by calling Camera.release().

Note: It is possible to use MediaRecorder without creating a camera preview first and skip the first few steps of this process. However, since users typically prefer to see a preview before starting a recording, that process is not discussed here.
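Because the call order matters, it can help to write it down as data. The sketch below is a hypothetical helper, not part of the Android API: RecorderStep lists the recording calls from the steps above in their documented order (modeling only the setProfile() path for API Level 8 and higher), and followsDocumentedOrder() reports whether a logged sequence of calls ever goes backwards. It only checks ordering; it does not detect a required step that was skipped, and the optional reset() may be omitted.

```java
import java.util.List;

/** Hypothetical labels for the video capture calls, declared in the documented order. */
enum RecorderStep {
    UNLOCK_CAMERA,       // Camera.unlock()
    SET_CAMERA,          // MediaRecorder.setCamera()
    SET_AUDIO_SOURCE,    // MediaRecorder.setAudioSource()
    SET_VIDEO_SOURCE,    // MediaRecorder.setVideoSource()
    SET_PROFILE,         // MediaRecorder.setProfile()
    SET_OUTPUT_FILE,     // MediaRecorder.setOutputFile()
    SET_PREVIEW_DISPLAY, // MediaRecorder.setPreviewDisplay()
    PREPARE,             // MediaRecorder.prepare()
    START,               // MediaRecorder.start()
    STOP,                // MediaRecorder.stop()
    RESET,               // MediaRecorder.reset() (optional)
    RELEASE,             // MediaRecorder.release()
    LOCK_CAMERA          // Camera.lock()
}

final class RecorderOrder {
    private RecorderOrder() {}

    /** True if every call comes strictly later in the documented order than the one before it. */
    static boolean followsDocumentedOrder(List<RecorderStep> calls) {
        int last = -1;
        for (RecorderStep step : calls) {
            if (step.ordinal() <= last) {
                return false; // out of order or repeated
            }
            last = step.ordinal();
        }
        return true;
    }
}
```

A wrapper class around MediaRecorder could append a RecorderStep each time it makes the corresponding call and assert followsDocumentedOrder() in its own tests.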

Tip: If your application is typically used for recording video, set setRecordingHint(boolean) to true prior to starting your preview. This setting can help reduce the time it takes to start recording.

Configuring MediaRecorder

When using the MediaRecorder class to record video, you must perform configuration steps in a specific order and then call the MediaRecorder.prepare() method to check and implement the configuration. The following example code demonstrates how to properly configure and prepare the MediaRecorder class for video recording.

Kotlin

private fun prepareVideoRecorder(): Boolean {
    mediaRecorder = MediaRecorder()

    mCamera?.let { camera ->
        // Step 1: Unlock and set camera to MediaRecorder
        camera.unlock()

        mediaRecorder?.run {
            setCamera(camera)

            // Step 2: Set sources
            setAudioSource(MediaRecorder.AudioSource.CAMCORDER)
            setVideoSource(MediaRecorder.VideoSource.CAMERA)

            // Step 3: Set a CamcorderProfile (requires API Level 8 or higher).
            // The profile already sets the output format and encoders, so
            // setOutputFormat()/setAudioEncoder()/setVideoEncoder() are not called again.
            setProfile(CamcorderProfile.get(CamcorderProfile.QUALITY_HIGH))

            // Step 4: Set output file
            setOutputFile(getOutputMediaFile(MEDIA_TYPE_VIDEO).toString())

            // Step 5: Set the preview output
            setPreviewDisplay(mPreview?.holder?.surface)

            // Step 6: Prepare configured MediaRecorder
            return try {
                prepare()
                true
            } catch (e: IllegalStateException) {
                Log.d(TAG, "IllegalStateException preparing MediaRecorder: ${e.message}")
                releaseMediaRecorder()
                false
            } catch (e: IOException) {
                Log.d(TAG, "IOException preparing MediaRecorder: ${e.message}")
                releaseMediaRecorder()
                false
            }
        }
    }
    return false
}

Java

private boolean prepareVideoRecorder() {

    // Reuse the camera instance opened for the preview (see Accessing cameras)
    // instead of opening it again.
    if (mCamera == null) {
        return false;
    }
    mediaRecorder = new MediaRecorder();

    // Step 1: Unlock and set camera to MediaRecorder
    mCamera.unlock();
    mediaRecorder.setCamera(mCamera);

    // Step 2: Set sources
    mediaRecorder.setAudioSource(MediaRecorder.AudioSource.CAMCORDER);
    mediaRecorder.setVideoSource(MediaRecorder.VideoSource.CAMERA);

    // Step 3: Set a CamcorderProfile (requires API Level 8 or higher)
    mediaRecorder.setProfile(CamcorderProfile.get(CamcorderProfile.QUALITY_HIGH));

    // Step 4: Set output file
    mediaRecorder.setOutputFile(getOutputMediaFile(MEDIA_TYPE_VIDEO).toString());

    // Step 5: Set the preview output
    mediaRecorder.setPreviewDisplay(mPreview.getHolder().getSurface());

    // Step 6: Prepare configured MediaRecorder
    try {
        mediaRecorder.prepare();
    } catch (IllegalStateException e) {
        Log.d(TAG, "IllegalStateException preparing MediaRecorder: " + e.getMessage());
        releaseMediaRecorder();
        return false;
    } catch (IOException e) {
        Log.d(TAG, "IOException preparing MediaRecorder: " + e.getMessage());
        releaseMediaRecorder();
        return false;
    }
    return true;
}

Prior to Android 2.2 (API Level 8), you must set the output format and encoding format parameters directly, instead of using CamcorderProfile. This approach is demonstrated in the following code:

Kotlin

// Step 3: Set output format and encoding (for versions prior to API Level 8)
mediaRecorder?.apply {
    setOutputFormat(MediaRecorder.OutputFormat.MPEG_4)
    setAudioEncoder(MediaRecorder.AudioEncoder.DEFAULT)
    setVideoEncoder(MediaRecorder.VideoEncoder.DEFAULT)
}

Java

// Step 3: Set output format and encoding (for versions prior to API Level 8)
mediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);
mediaRecorder.setAudioEncoder(MediaRecorder.AudioEncoder.DEFAULT);
mediaRecorder.setVideoEncoder(MediaRecorder.VideoEncoder.DEFAULT);

The following video recording parameters for MediaRecorder are given default settings; however, you may want to adjust these settings for your application:

- setVideoEncodingBitRate()
- setVideoSize()
- setVideoFrameRate()
- setAudioEncodingBitRate()
- setAudioChannels()
- setAudioSamplingRate()
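The bit rates passed to setVideoEncodingBitRate() and setAudioEncodingBitRate() are in bits per second, so a quick way to judge a setting is to estimate the resulting file size: (video bit rate + audio bit rate) × duration in seconds ÷ 8 gives bytes. The sketch below is only that back-of-the-envelope arithmetic; RecordingSizeEstimate is a hypothetical name, and real files differ because of container overhead and encoder rate control.

```java
/** Hypothetical helper: rough recording size from MediaRecorder bit rate settings. */
final class RecordingSizeEstimate {
    private RecordingSizeEstimate() {}

    /**
     * Approximate size in bytes. Bit rates are in bits per second, as for
     * setVideoEncodingBitRate() and setAudioEncodingBitRate().
     * Ignores container overhead and rate-control variation.
     */
    static long approxBytes(int videoBitRate, int audioBitRate, int seconds) {
        long totalBits = ((long) videoBitRate + audioBitRate) * seconds; // long avoids int overflow
        return totalBits / 8;
    }
}
```

For example, approxBytes(10_000_000, 128_000, 60) returns 75,960,000, roughly 76 MB for one minute of 10 Mbit/s video with 128 kbit/s audio; these values are illustrative, not defaults.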

Starting and stopping MediaRecorder

When starting and stopping video recording using the MediaRecorder class, you must follow a specific order, as listed below.

- Unlock the camera with Camera.unlock()
- Configure MediaRecorder as shown in the code example above
- Start recording using MediaRecorder.start()
- Record the video
- Stop recording using MediaRecorder.stop()
- Release the media recorder with MediaRecorder.release()
- Lock the camera using Camera.lock()

The following example code demonstrates how to wire up a button to properly start and stop video recording using the camera and the MediaRecorder class.

Note: When completing a video recording, do not release the camera or else your preview will be stopped.

Kotlin

var isRecording = false
val captureButton: Button = findViewById(R.id.button_capture)
captureButton.setOnClickListener {
    if (isRecording) {
        // stop recording and release the MediaRecorder (keep the camera)
        mediaRecorder?.stop() // stop the recording
        releaseMediaRecorder() // release the MediaRecorder object
        mCamera?.lock() // take camera access back from MediaRecorder

        // inform the user that recording has stopped
        setCaptureButtonText("Capture")
        isRecording = false
    } else {
        // initialize video camera
        if (prepareVideoRecorder()) {
            // Camera is available and unlocked, MediaRecorder is prepared,
            // now you can start recording
            mediaRecorder?.start()

            // inform the user that recording has started
            setCaptureButtonText("Stop")
            isRecording = true
        } else {
            // prepare didn't work, release the MediaRecorder
            releaseMediaRecorder()
            // inform user
        }
    }
}

Java

    private boolean isRecording = false;

    // Add a listener to the Capture button
    Button captureButton = (Button) findViewById(R.id.button_capture);
    captureButton.setOnClickListener(
        new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                if (isRecording) {
                    // stop recording and release camera
                    mediaRecorder.stop();   // stop the recording
                    releaseMediaRecorder(); // release the MediaRecorder object
                    mCamera.lock();         // take camera access back from MediaRecorder

                    // inform the user that recording has stopped
                    setCaptureButtonText("Capture");
                    isRecording = false;
                } else {
                    // initialize video camera
                    if (prepareVideoRecorder()) {
                        // Camera is available and unlocked, MediaRecorder is prepared,
                        // now you can start recording
                        mediaRecorder.start();

                        // inform the user that recording has started
                        setCaptureButtonText("Stop");
                        isRecording = true;
                    } else {
                        // prepare didn't work, release the camera
                        releaseMediaRecorder();
                        // inform user
                    }
                }
            }
        }
    );

Note: In the above example, the prepareVideoRecorder() method refers to the example code shown in Configuring MediaRecorder. This method takes care of unlocking the camera, configuring and preparing the MediaRecorder instance.

Releasing the camera

Cameras are a resource that is shared by applications on a device. Your application can make use of the camera after getting an instance of Camera. You must be especially careful to release the camera object when your application stops using it, and as soon as your application is paused (Activity.onPause()). If your application does not properly release the camera, all subsequent attempts to access the camera, including those by your own application, will fail and may cause your or other applications to be shut down.

To release an instance of the Camera object, use the Camera.release() method, as shown in the example code below.

Kotlin

    class CameraActivity : Activity() {
        private var mCamera: Camera? = null
        private var preview: SurfaceView? = null
        private var mediaRecorder: MediaRecorder? = null

        override fun onPause() {
            super.onPause()
            releaseMediaRecorder() // if you are using MediaRecorder, release it first
            releaseCamera()        // release the camera immediately on pause event
        }

        private fun releaseMediaRecorder() {
            mediaRecorder?.reset()   // clear recorder configuration
            mediaRecorder?.release() // release the recorder object
            mediaRecorder = null
            mCamera?.lock()          // lock camera for later use
        }

        private fun releaseCamera() {
            mCamera?.release() // release the camera for other applications
            mCamera = null
        }
    }

Java

    public class CameraActivity extends Activity {
        private Camera mCamera;
        private SurfaceView preview;
        private MediaRecorder mediaRecorder;

        // ...

        @Override
        protected void onPause() {
            super.onPause();
            releaseMediaRecorder(); // if you are using MediaRecorder, release it first
            releaseCamera();        // release the camera immediately on pause event
        }

        private void releaseMediaRecorder() {
            if (mediaRecorder != null) {
                mediaRecorder.reset();   // clear recorder configuration
                mediaRecorder.release(); // release the recorder object
                mediaRecorder = null;
                mCamera.lock();          // lock camera for later use
            }
        }

        private void releaseCamera() {
            if (mCamera != null) {
                mCamera.release(); // release the camera for other applications
                mCamera = null;
            }
        }
    }

Caution: If your application does not properly release the camera, all subsequent attempts to access the camera, including those by your own application, will fail and may cause your or other applications to be shut down.

Saving media files

Media files created by users, such as pictures and videos, should be saved to a device's external storage directory (SD Card) to conserve system space and to allow users to access these files without their device. There are many possible directory locations to save media files on a device; however, there are only two standard locations you should consider as a developer:

- Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES) - This method returns the standard, shared and recommended location for saving pictures and videos. This directory is shared (public), so other applications can easily discover, read, change and delete files saved in this location. If your application is uninstalled by the user, media files saved to this location will not be removed. To avoid interfering with users' existing pictures and videos, you should create a sub-directory for your application's media files within this directory, as shown in the code sample below. This method is available in Android 2.2 (API Level 8); for equivalent calls in earlier API versions, see Saving Shared Files.

- Context.getExternalFilesDir(Environment.DIRECTORY_PICTURES) - This method returns a standard location for saving pictures and videos that are associated with your application. If your application is uninstalled, any files saved in this location are removed. Security is not enforced for files in this location, and other applications may read, change and delete them.

The following example code demonstrates how to create a File or Uri location for a media file that can be used when invoking a device's camera with an Intent or as part of Building a Camera App.

Kotlin

    val MEDIA_TYPE_IMAGE = 1
    val MEDIA_TYPE_VIDEO = 2

    /** Create a file Uri for saving an image or video */
    private fun getOutputMediaFileUri(type: Int): Uri {
        return Uri.fromFile(getOutputMediaFile(type))
    }

    /** Create a File for saving an image or video */
    private fun getOutputMediaFile(type: Int): File? {
        // To be safe, you should check that the SDCard is mounted
        // using Environment.getExternalStorageState() before doing this.

        val mediaStorageDir = File(
            Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES),
            "MyCameraApp"
        )
        // This location works best if you want the created images to be shared
        // between applications and persist after your app has been uninstalled.

        // Create the storage directory if it does not exist
        mediaStorageDir.apply {
            if (!exists()) {
                if (!mkdirs()) {
                    Log.d("MyCameraApp", "failed to create directory")
                    return null
                }
            }
        }

        // Create a media file name
        val timeStamp = SimpleDateFormat("yyyyMMdd_HHmmss").format(Date())
        return when (type) {
            MEDIA_TYPE_IMAGE -> {
                File("${mediaStorageDir.path}${File.separator}IMG_$timeStamp.jpg")
            }
            MEDIA_TYPE_VIDEO -> {
                File("${mediaStorageDir.path}${File.separator}VID_$timeStamp.mp4")
            }
            else -> null
        }
    }

Java

    public static final int MEDIA_TYPE_IMAGE = 1;
    public static final int MEDIA_TYPE_VIDEO = 2;

    /** Create a file Uri for saving an image or video */
    private static Uri getOutputMediaFileUri(int type) {
        return Uri.fromFile(getOutputMediaFile(type));
    }

    /** Create a File for saving an image or video */
    private static File getOutputMediaFile(int type) {
        // To be safe, you should check that the SDCard is mounted
        // using Environment.getExternalStorageState() before doing this.

        File mediaStorageDir = new File(Environment.getExternalStoragePublicDirectory(
                Environment.DIRECTORY_PICTURES), "MyCameraApp");
        // This location works best if you want the created images to be shared
        // between applications and persist after your app has been uninstalled.

        // Create the storage directory if it does not exist
        if (!mediaStorageDir.exists()) {
            if (!mediaStorageDir.mkdirs()) {
                Log.d("MyCameraApp", "failed to create directory");
                return null;
            }
        }

        // Create a media file name
        String timeStamp = new SimpleDateFormat("yyyyMMdd_HHmmss").format(new Date());
        File mediaFile;
        if (type == MEDIA_TYPE_IMAGE) {
            mediaFile = new File(mediaStorageDir.getPath() + File.separator +
                    "IMG_" + timeStamp + ".jpg");
        } else if (type == MEDIA_TYPE_VIDEO) {
            mediaFile = new File(mediaStorageDir.getPath() + File.separator +
                    "VID_" + timeStamp + ".mp4");
        } else {
            return null;
        }

        return mediaFile;
    }

Note: Environment.getExternalStoragePublicDirectory() is available in Android 2.2 (API Level 8) or higher. If you are targeting devices with earlier versions of Android, use Environment.getExternalStorageDirectory() instead. For more information, see Saving Shared Files.
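The naming logic in getOutputMediaFile() can be pulled out into a small, Android-free method so that it can be unit tested. The sketch below is a hypothetical refactoring called MediaFileNames, not part of the original sample. It makes two changes. First, it takes the timestamp as a parameter. Second, it passes Locale.US to SimpleDateFormat; the pattern-only constructor used above follows the device's default locale, which may not produce plain ASCII digits on every device.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;

/**
 * Hypothetical, Android-free version of the file-naming part of
 * getOutputMediaFile(): "IMG_yyyyMMdd_HHmmss.jpg" or "VID_yyyyMMdd_HHmmss.mp4".
 */
final class MediaFileNames {
    static final int MEDIA_TYPE_IMAGE = 1;
    static final int MEDIA_TYPE_VIDEO = 2;

    private MediaFileNames() {}

    /** Returns the file name, or null for an unknown media type (as in the sample). */
    static String nameFor(int type, Date when) {
        // Locale.US keeps the timestamp digits ASCII regardless of device locale;
        // the time zone is still the JVM/device default, as in the sample.
        String timeStamp = new SimpleDateFormat("yyyyMMdd_HHmmss", Locale.US).format(when);
        switch (type) {
            case MEDIA_TYPE_IMAGE:
                return "IMG_" + timeStamp + ".jpg";
            case MEDIA_TYPE_VIDEO:
                return "VID_" + timeStamp + ".mp4";
            default:
                return null;
        }
    }
}
```

In an app, you could combine it with the storage directory from the sample: `new File(mediaStorageDir, MediaFileNames.nameFor(type, new Date()))`, after checking that the name is not null.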

To make the URI support work profiles, first convert the file URI to a content URI. Then, add the content URI to EXTRA_OUTPUT of an Intent.

For more information about saving files on an Android device, see Data Storage.

Camera features

Android supports a wide array of camera features you can control with your camera application, such as picture format, flash mode, focus settings, and many more. This section lists the common camera features and briefly discusses how to use them. Most camera features can be accessed and set through the Camera.Parameters object. However, there are several important features that require more than simple settings in Camera.Parameters. These features are covered in the following sections:

- Metering and focus areas

- Face detection

- Time lapse video

For general information about how to use features that are controlled through Camera.Parameters, review the Using camera features section. For more detailed information about how to use features controlled through the camera parameters object, follow the links in the feature list below to the API reference documentation.

Table 1. Common camera features sorted by the Android API Level in which they were introduced.

| Feature | API Level | Description |
|---|---|---|
| Face Detection | 14 | Identify human faces within a picture and use them for focus, metering and white balance |
| Metering Areas | 14 | Specify one or more areas within an image for calculating white balance |
| Focus Areas | 14 | Set one or more areas within an image to use for focus |
| White Balance Lock | 14 | Stop or start automatic white balance adjustments |
| Exposure Lock | 14 | Stop or start automatic exposure adjustments |
| Video Snapshot | 14 | Take a picture while shooting video (frame grab) |
| Time Lapse Video | 11 | Record frames with set delays to record a time lapse video |
| Multiple Cameras | 9 | Support for more than one camera on a device, including front-facing and back-facing cameras |
| Focus Distance | 9 | Reports distances between the camera and objects that appear to be in focus |
| Zoom | 8 | Set image magnification |
| Exposure Compensation | 8 | Increase or decrease the light exposure level |
| GPS Data | 5 | Include or omit geographic location data with the image |
| White Balance | 5 | Set the white balance mode, which affects color values in the captured image |
| Focus Mode | 5 | Set how the camera focuses on a subject, such as automatic, fixed, macro or infinity |
| Scene Mode | 5 | Apply a preset mode for specific types of photography situations, such as night, beach, snow or candlelight scenes |
| JPEG Quality | 5 | Set the compression level for a JPEG image, which increases or decreases image output file quality and size |
| Flash Mode | 5 | Turn flash on, off, or use automatic setting |
| Color Effects | 5 | Apply a color effect to the captured image, such as black and white, sepia tone or negative |
| Anti-Banding | 5 | Reduces the effect of banding in color gradients due to JPEG compression |
| Picture Format | 1 | Specify the file format for the picture |
| Picture Size | 1 | Specify the pixel dimensions of the saved picture |

Note: These features are not supported on all devices due to hardware differences and software implementation. For information on checking the availability of features on the device where your application is running, see Checking feature availability.

Checking feature availability

The first thing to understand when setting out to use camera features on Android devices is that not all camera features are supported on all devices. In addition, devices that support a particular feature may support it to different levels or with different options. Therefore, part of your decision process as you develop a camera application is to decide which camera features you want to support and to what level. After making that decision, you should plan on including code in your camera application that checks whether the device hardware supports those features and fails gracefully if a feature is not available.

You can check the availability of camera features by getting an instance of a camera's parameters object and checking the relevant methods. The following code sample shows you how to obtain a Camera.Parameters object and check whether the camera supports the autofocus feature:

Kotlin

    val params: Camera.Parameters? = camera?.parameters
    val focusModes: List<String>? = params?.supportedFocusModes
    if (focusModes?.contains(Camera.Parameters.FOCUS_MODE_AUTO) == true) {
        // Autofocus mode is supported
    }

Java

    // get Camera parameters
    Camera.Parameters params = camera.getParameters();

    List<String> focusModes = params.getSupportedFocusModes();
    if (focusModes.contains(Camera.Parameters.FOCUS_MODE_AUTO)) {
        // Autofocus mode is supported
    }

You can use the technique shown above for most camera features. The Camera.Parameters object provides a getSupported...(), is...Supported() or getMax...() method to determine if (and to what extent) a feature is supported.
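Failing gracefully often means choosing the best option the device actually reports rather than requiring a single value. The sketch below is a hypothetical helper, FeatureSupport, not an Android API. It returns the first preferred value found in a supported list. It also treats a null list as "nothing supported", because some getSupported...() methods, such as getSupportedFlashModes(), can return null when the setting is unavailable. In an app, you would pass params.getSupportedFocusModes() together with Camera.Parameters FOCUS_MODE_* constants. The literal strings in the usage note are assumed to match those constants and are used only so the sketch runs without the Android SDK.

```java
import java.util.List;

/**
 * Hypothetical helper: picks the first preferred value that the device
 * reports as supported, or returns null if none of them are.
 */
final class FeatureSupport {
    private FeatureSupport() {}

    static String firstSupported(List<String> supported, String... preferred) {
        if (supported == null) {
            // Treat a null list as "this setting is not supported at all".
            return null;
        }
        for (String candidate : preferred) {
            if (supported.contains(candidate)) {
                return candidate; // best available option, in preference order
            }
        }
        return null;
    }
}
```

For example, `firstSupported(modes, "continuous-picture", "auto")` prefers continuous autofocus and falls back to plain autofocus. A null result tells the caller to leave the camera's default focus mode unchanged.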

If your application requires certain camera features in order to function properly, you can require them through additions to your application manifest. When you declare the use of specific camera features, such as flash and auto-focus, Google Play restricts your application from being installed on devices that do not support these features. For a list of camera features that can be declared in your app manifest, see the manifest Features Reference.

Using camera features

Most camera features are activated and controlled using a Camera.Parameters object. You obtain this object by first getting an instance of the Camera object and calling the getParameters() method. You then change the returned parameter object and set it back into the camera object, as demonstrated in the following example code:

Kotlin

    val params: Camera.Parameters? = camera?.parameters
    params?.focusMode = Camera.Parameters.FOCUS_MODE_AUTO
    camera?.parameters = params

Java

    // get Camera parameters
    Camera.Parameters params = camera.getParameters();
    // set the focus mode
    params.setFocusMode(Camera.Parameters.FOCUS_MODE_AUTO);
    // set Camera parameters
    camera.setParameters(params);

This technique works for almost all camera features, and most parameters can be changed at any time after you have obtained an instance of the Camera object. Changes to parameters are typically visible to the user immediately in the application's camera preview. On the software side, parameter changes may take several frames to actually take effect as the camera hardware processes the new instructions and then sends updated image data.

Important: Some camera features cannot be changed at will. In particular, changing the size or orientation of the camera preview requires that you first stop the preview, change the preview size, and then restart the preview. Starting with Android 4.0 (API Level 14), preview orientation can be changed without restarting the preview.
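The stop, change, restart sequence can be wrapped in one method so that callers cannot get the order wrong. The sketch below is hypothetical. It puts an interface, PreviewControl, in front of the three camera calls so that the ordering can be shown and tested without the Android SDK. In an app, an adapter would forward these calls to camera.stopPreview(), to Camera.Parameters.setPreviewSize() followed by camera.setParameters(), and to camera.startPreview(). Restarting the preview in a finally block is a design choice: if the size change throws, the user is not left with a stopped preview.

```java
/**
 * Hypothetical abstraction over the camera calls involved in a preview
 * resize (not an Android API).
 */
interface PreviewControl {
    void stopPreview();
    void setPreviewSize(int width, int height);
    void startPreview();
}

final class PreviewResizer {
    private PreviewResizer() {}

    /** Applies a new preview size in the required order: stop, change, restart. */
    static void resize(PreviewControl preview, int width, int height) {
        preview.stopPreview();
        try {
            preview.setPreviewSize(width, height);
        } finally {
            // Restart even if the size change failed, so the preview
            // comes back with its previous settings.
            preview.startPreview();
        }
    }
}
```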

Other camera features require more code in order to implement, including:

- Metering and focus areas

- Face detection

- Time lapse video

A quick outline of how to implement these features is provided in the following sections.

Metering and focus areas

In some photographic scenarios, automatic focusing and light metering may not produce the desired results. Starting with Android 4.0 (API Level 14), your camera application can provide additional controls that allow your app or users to specify areas in an image to use for determining focus or light level settings. The app then passes these values to the camera hardware for use in capturing images or video.

Areas for metering and focus work very similarly to other camera features, in that you control them through methods in the Camera.Parameters object. The following code demonstrates setting two light metering areas for an instance of Camera:

Kotlin

    // Create an instance of Camera
    camera = getCameraInstance()

    // set Camera parameters
    val params: Camera.Parameters? = camera?.parameters

    params?.apply {
        if (maxNumMeteringAreas > 0) { // check that metering areas are supported
            meteringAreas = ArrayList<Camera.Area>().apply {
                val areaRect1 = Rect(-100, -100, 100, 100)   // specify an area in center of image
                add(Camera.Area(areaRect1, 600))             // set weight to 60%
                val areaRect2 = Rect(800, -1000, 1000, -800) // specify an area in upper right of image
                add(Camera.Area(areaRect2, 400))             // set weight to 40%
            }
        }
        camera?.parameters = this
    }

Java

    // Create an instance of Camera
    camera = getCameraInstance();

    // set Camera parameters
    Camera.Parameters params = camera.getParameters();

    if (params.getMaxNumMeteringAreas() > 0) { // check that metering areas are supported
        List<Camera.Area> meteringAreas = new ArrayList<Camera.Area>();

        Rect areaRect1 = new Rect(-100, -100, 100, 100);    // specify an area in center of image
        meteringAreas.add(new Camera.Area(areaRect1, 600)); // set weight to 60%
        Rect areaRect2 = new Rect(800, -1000, 1000, -800);  // specify an area in upper right of image
        meteringAreas.add(new Camera.Area(areaRect2, 400)); // set weight to 40%
        params.setMeteringAreas(meteringAreas);
    }

    camera.setParameters(params);

The Camera.Area object contains two data parameters: a Rect object for specifying an area within the camera's field of view, and a weight value, which tells the camera what level of importance this area should be given in light metering or focus calculations.
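The "60%" and "40%" comments above hold because both rectangles have the same size. The Camera.Area reference describes the weight as applying to every pixel in the area, so a larger area with the same weight has more influence. The sketch below is a hypothetical helper, MeteringShares, not an Android API. It computes each area's share of the combined weight under that per-pixel reading. It also checks the documented limits of 1 to 1000 for the weight and the -1000 to 1000 grid for the rectangle. Rects are passed as {left, top, right, bottom}, and overlapping areas are simply summed here.

```java
/**
 * Hypothetical helper: fraction of the total metering weight contributed
 * by each Camera.Area-style rect, assuming weight applies per pixel.
 */
final class MeteringShares {
    private MeteringShares() {}

    static double[] shares(int[][] rects, int[] weights) {
        if (rects.length != weights.length || rects.length == 0) {
            throw new IllegalArgumentException("need one weight per rect");
        }
        double[] contribution = new double[rects.length];
        double total = 0;
        for (int i = 0; i < rects.length; i++) {
            int[] r = rects[i]; // {left, top, right, bottom}
            if (weights[i] < 1 || weights[i] > 1000) {
                throw new IllegalArgumentException("weight must be 1..1000");
            }
            if (r[0] < -1000 || r[1] < -1000 || r[2] > 1000 || r[3] > 1000
                    || r[0] >= r[2] || r[1] >= r[3]) {
                throw new IllegalArgumentException("rect must be non-empty and inside the grid");
            }
            long area = (long) (r[2] - r[0]) * (r[3] - r[1]);
            contribution[i] = area * (double) weights[i]; // weight applied to every unit of area
            total += contribution[i];
        }
        for (int i = 0; i < contribution.length; i++) {
            contribution[i] /= total; // normalize to a fraction of the whole
        }
        return contribution;
    }
}
```

With the two rects from the example and weights 600 and 400, the shares come out at 0.6 and 0.4. Doubling the size of one rect would change that split even though the weights stay the same.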

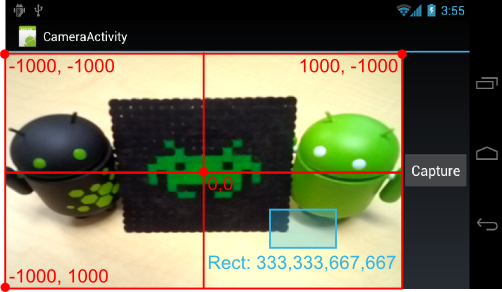

The Rect field in a Camera.Area object describes a rectangular shape mapped on a 2000 x 2000 unit grid. The coordinates -1000, -1000 represent the top, left corner of the camera image, and the coordinates 1000, 1000 represent the bottom, right corner of the camera image, as shown in the illustration below.

Figure 1. The red lines illustrate the coordinate system for specifying a Camera.Area within a camera preview. The blue box shows the location and shape of a camera area with the Rect values 333,333,667,667.

The bounds of this coordinate system always correspond to the outer edge of the image visible in the camera preview and do not shrink or expand with the zoom level. Similarly, rotation of the image preview using Camera.setDisplayOrientation() does not remap the coordinate system.
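A common use of this grid is tap-to-focus, where a touch point in the preview view becomes a Camera.Area rect. The sketch below is a hypothetical helper, AreaMapper, not an Android API. It linearly maps view pixels onto the -1000 to 1000 range and builds a square around the touch point. If the square would cross the grid edge, it is shifted back inside rather than shrunk. The sketch assumes that the preview fills the view with no rotation or mirroring. Because setDisplayOrientation() does not remap the grid, a rotated or front-camera preview would need an extra transform first. The result is an int array {left, top, right, bottom} that you would wrap in a Rect.

```java
/**
 * Hypothetical helper: maps a touch point in preview-view pixels to a square
 * Camera.Area-style rect on the -1000..1000 grid.
 * Assumes an unrotated, unmirrored preview that fills the view.
 */
final class AreaMapper {
    private AreaMapper() {}

    static int[] touchToArea(float x, float y, int viewWidth, int viewHeight, int halfSize) {
        if (viewWidth <= 0 || viewHeight <= 0) {
            throw new IllegalArgumentException("view size must be positive");
        }
        if (halfSize < 1 || halfSize > 1000) {
            throw new IllegalArgumentException("halfSize must be 1..1000");
        }
        int cx = toGrid(x, viewWidth);
        int cy = toGrid(y, viewHeight);
        // Shift the square back inside the grid instead of shrinking it.
        int left = clamp(cx - halfSize, -1000, 1000 - 2 * halfSize);
        int top = clamp(cy - halfSize, -1000, 1000 - 2 * halfSize);
        return new int[] {left, top, left + 2 * halfSize, top + 2 * halfSize};
    }

    // Linear map: 0 -> -1000, extent -> 1000.
    private static int toGrid(float pos, int extent) {
        return Math.round(pos / extent * 2000f - 1000f);
    }

    private static int clamp(int v, int min, int max) {
        return Math.max(min, Math.min(max, v));
    }
}
```

A tap in the center of a 1000 x 1000 view with halfSize 100 yields {-100, -100, 100, 100}, which matches the center area in the metering example. A tap in the top-right corner yields {800, -1000, 1000, -800}, which matches the upper-right area.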

Face detection

For pictures that include people, faces are usually the most important part of the picture, and they should be used for determining both focus and white balance when capturing an image. The Android 4.0 (API Level 14) framework provides APIs for identifying faces and calculating picture settings using face recognition technology.

Note: While the face detection feature is running, setWhiteBalance(String), setFocusAreas(List<Camera.Area>) and setMeteringAreas(List<Camera.Area>) have no effect.

Using the face detection feature in your camera application requires a few general steps:

- Check that face detection is supported on the device

- Create a face detection listener

- Add the face detection listener to your camera object

- Start face detection after preview (and after every preview restart)

The face detection feature is not supported on all devices. You can check that this feature is supported by calling getMaxNumDetectedFaces(). An example of this check is shown in the startFaceDetection() sample method below.

In order to be notified of and respond to the detection of a face, your camera application must set a listener for face detection events. To do this, you must create a listener class that implements the Camera.FaceDetectionListener interface, as shown in the example code below.

Kotlin

    internal class MyFaceDetectionListener : Camera.FaceDetectionListener {

        override fun onFaceDetection(faces: Array<Camera.Face>, camera: Camera) {
            if (faces.isNotEmpty()) {
                Log.d("FaceDetection", ("face detected: ${faces.size}" +
                        " Face 1 Location X: ${faces[0].rect.centerX()}" +
                        " Y: ${faces[0].rect.centerY()}"))
            }
        }
    }

Java

    class MyFaceDetectionListener implements Camera.FaceDetectionListener {

        @Override
        public void onFaceDetection(Face[] faces, Camera camera) {
            if (faces.length > 0) {
                Log.d("FaceDetection", "face detected: " + faces.length +
                        " Face 1 Location X: " + faces[0].rect.centerX() +
                        " Y: " + faces[0].rect.centerY());
            }
        }
    }

After creating this class, you then set it into your application's Camera object, as shown in the example code below:

Kotlin

camera?.setFaceDetectionListener(MyFaceDetectionListener())

Java

camera.setFaceDetectionListener(new MyFaceDetectionListener());

Your application must start the face detection function each time you start (or restart) the camera preview. Create a method for starting face detection so you can call it as needed, as shown in the example code below.

Kotlin

    fun startFaceDetection() {
        // Try starting Face Detection
        val params = mCamera?.parameters
        // start face detection only *after* preview has started

        params?.apply {
            if (maxNumDetectedFaces > 0) {
                // camera supports face detection, so can start it:
                mCamera?.startFaceDetection()
            }
        }
    }

Java

    public void startFaceDetection() {
        // Try starting Face Detection
        Camera.Parameters params = mCamera.getParameters();

        // start face detection only *after* preview has started
        if (params.getMaxNumDetectedFaces() > 0) {
            // camera supports face detection, so can start it:
            mCamera.startFaceDetection();
        }
    }

You must start face detection each time you start (or restart) the camera preview. If you use the preview class shown in Creating a preview class, add your startFaceDetection() method to both the surfaceCreated() and surfaceChanged() methods in your preview class, as shown in the sample code below.

Kotlin

    override fun surfaceCreated(holder: SurfaceHolder) {
        try {
            mCamera.setPreviewDisplay(holder)
            mCamera.startPreview()

            startFaceDetection() // start face detection feature
        } catch (e: IOException) {
            Log.d(TAG, "Error setting camera preview: ${e.message}")
        }
    }

    override fun surfaceChanged(holder: SurfaceHolder, format: Int, w: Int, h: Int) {
        if (holder.surface == null) {
            // preview surface does not exist
            Log.d(TAG, "holder.getSurface() == null")
            return
        }
        try {
            mCamera.stopPreview()
        } catch (e: Exception) {
            // ignore: tried to stop a non-existent preview
            Log.d(TAG, "Error stopping camera preview: ${e.message}")
        }
        try {
            mCamera.setPreviewDisplay(holder)
            mCamera.startPreview()

            startFaceDetection() // re-start face detection feature
        } catch (e: Exception) {
            // failed to restart the preview
            Log.d(TAG, "Error starting camera preview: ${e.message}")
        }
    }

Java

    public void surfaceCreated(SurfaceHolder holder) {
        try {
            mCamera.setPreviewDisplay(holder);
            mCamera.startPreview();

            startFaceDetection(); // start face detection feature

        } catch (IOException e) {
            Log.d(TAG, "Error setting camera preview: " + e.getMessage());
        }
    }

    public void surfaceChanged(SurfaceHolder holder, int format, int w, int h) {

        if (holder.getSurface() == null) {
            // preview surface does not exist
            Log.d(TAG, "holder.getSurface() == null");
            return;
        }

        try {
            mCamera.stopPreview();

        } catch (Exception e) {
            // ignore: tried to stop a non-existent preview
            Log.d(TAG, "Error stopping camera preview: " + e.getMessage());
        }

        try {
            mCamera.setPreviewDisplay(holder);
            mCamera.startPreview();

            startFaceDetection(); // re-start face detection feature

        } catch (Exception e) {
            // failed to restart the preview
            Log.d(TAG, "Error starting camera preview: " + e.getMessage());
        }
    }

Note: Remember to call this method after calling startPreview(). Do not attempt to start face detection in the onCreate() method of your camera app's main activity, as the preview is not available at that point in your application's execution.
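To draw a box around a detected face, the face rect must be converted from camera coordinates into view pixels. The Camera.Face reference describes face.rect as using the same -1000 to 1000 grid as Camera.Area, with (-1000, -1000) at the top-left of the field of view. The sketch below is a hypothetical helper, FaceRectMapper, not an Android API. It applies the inverse of the linear grid mapping. It assumes the preview fills the view with no rotation. It also assumes a back-facing camera; a front-facing preview is usually mirrored and would need the x axis flipped. The rect is passed as {left, top, right, bottom}, for example taken from face.rect.

```java
/**
 * Hypothetical helper: maps a face rect on the -1000..1000 camera grid to
 * preview-view pixels. Assumes an unrotated, unmirrored preview filling the view.
 */
final class FaceRectMapper {
    private FaceRectMapper() {}

    /** rect is {left, top, right, bottom} on the grid; returns view-pixel floats. */
    static float[] toView(int[] rect, int viewWidth, int viewHeight) {
        return new float[] {
            fromGrid(rect[0], viewWidth),
            fromGrid(rect[1], viewHeight),
            fromGrid(rect[2], viewWidth),
            fromGrid(rect[3], viewHeight)
        };
    }

    // Linear map: -1000 -> 0, 1000 -> extent.
    private static float fromGrid(int g, int extent) {
        return (g + 1000) / 2000f * extent;
    }
}
```

In a listener like MyFaceDetectionListener, you could pass the converted values to a custom overlay view that draws the rectangle.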

Time lapse video

Time lapse video allows users to create video clips that combine pictures taken a few seconds or minutes apart. This feature uses MediaRecorder to record the images for a time lapse sequence.

To record a time lapse video with MediaRecorder, you must configure the recorder object as if you are recording a normal video, setting the captured frames per second to a low number and using one of the time lapse quality settings, as shown in the code example below.

Kotlin

    mediaRecorder.setProfile(CamcorderProfile.get(CamcorderProfile.QUALITY_TIME_LAPSE_HIGH))
    mediaRecorder.setCaptureRate(0.1) // capture a frame every 10 seconds

Java

    // Step 3: Set a CamcorderProfile (requires API Level 8 or higher)
    mediaRecorder.setProfile(CamcorderProfile.get(CamcorderProfile.QUALITY_TIME_LAPSE_HIGH));
    // ...
    // Step 5.5: Set the video capture rate to a low number
    mediaRecorder.setCaptureRate(0.1); // capture a frame every 10 seconds

These settings must be applied as part of a larger configuration procedure for MediaRecorder. For a full configuration code example, see Configuring MediaRecorder. Once the configuration is complete, you start the video recording as if you were recording a normal video clip. For more information about configuring and running MediaRecorder, see Capturing videos.
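When choosing a capture rate, it helps to work out how long the finished clip will be. The value passed to setCaptureRate() is in frames per second, so 0.1 means one frame every 10 seconds. The clip then plays back at the output video's frame rate, which in practice comes from the selected CamcorderProfile. The sketch below is a hypothetical helper, TimeLapsePlan, not an Android API. The 30 fps playback rate in the usage note is an assumed example value.

```java
/**
 * Hypothetical helper for planning a time lapse. captureRate is the value
 * passed to MediaRecorder.setCaptureRate() (frames per second); playbackFps
 * is the frame rate of the output video.
 */
final class TimeLapsePlan {
    private TimeLapsePlan() {}

    /** Frames captured over a real-world duration in seconds. */
    static long framesCaptured(double captureRate, double recordSeconds) {
        if (captureRate <= 0 || recordSeconds < 0) {
            throw new IllegalArgumentException("captureRate must be > 0, duration >= 0");
        }
        // Small epsilon guards against results like 28.999999999999996.
        return (long) Math.floor(captureRate * recordSeconds + 1e-9);
    }

    /** Length in seconds of the resulting clip at playbackFps. */
    static double outputSeconds(double captureRate, double recordSeconds, double playbackFps) {
        return framesCaptured(captureRate, recordSeconds) / playbackFps;
    }

    /** How many times faster than real time the clip plays. */
    static double speedUp(double captureRate, double playbackFps) {
        return playbackFps / captureRate;
    }
}
```

For example, recording for one hour at a capture rate of 0.1 produces 360 frames. At an assumed 30 fps playback, that is a 12-second clip running 300 times faster than real time.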

The Camera2Video and HdrViewfinder samples further demonstrate the use of the APIs covered on this page.

Camera fields that require permission

Apps running Android 10 (API level 29) or higher must have the CAMERA permission in order to access the values of the following fields that the getCameraCharacteristics() method returns:

- LENS_POSE_ROTATION

- LENS_POSE_TRANSLATION

- LENS_INTRINSIC_CALIBRATION

- LENS_RADIAL_DISTORTION

- LENS_POSE_REFERENCE

- LENS_DISTORTION

- LENS_INFO_HYPERFOCAL_DISTANCE

- LENS_INFO_MINIMUM_FOCUS_DISTANCE

- SENSOR_REFERENCE_ILLUMINANT1

- SENSOR_REFERENCE_ILLUMINANT2

- SENSOR_CALIBRATION_TRANSFORM1

- SENSOR_CALIBRATION_TRANSFORM2

- SENSOR_COLOR_TRANSFORM1

- SENSOR_COLOR_TRANSFORM2

- SENSOR_FORWARD_MATRIX1

- SENSOR_FORWARD_MATRIX2

Additional sample code

To download sample apps, see the Camera2Basic sample and Official CameraX sample app.

Source: https://developer.android.com/guide/topics/media/camera
